The EU AI Act: A Compliance Challenge with Dubious Benefits

The EU AI Act burdens businesses with vague mandates and heavy compliance costs, benefiting big tech while leaving smaller firms scrambling.

The European Union’s Artificial Intelligence Act is poised to impose a significant compliance burden on companies while offering little in the way of clear benefits. As Bloomberg’s Isabel Gottlieb reports, the first provisions of the Act take effect on February 2, including a ban on certain AI practices deemed to pose unacceptable risk and a sweeping requirement that companies train employees on AI literacy. While the law’s intentions might be noble, its ambiguities and burdensome requirements will likely create more confusion than clarity.

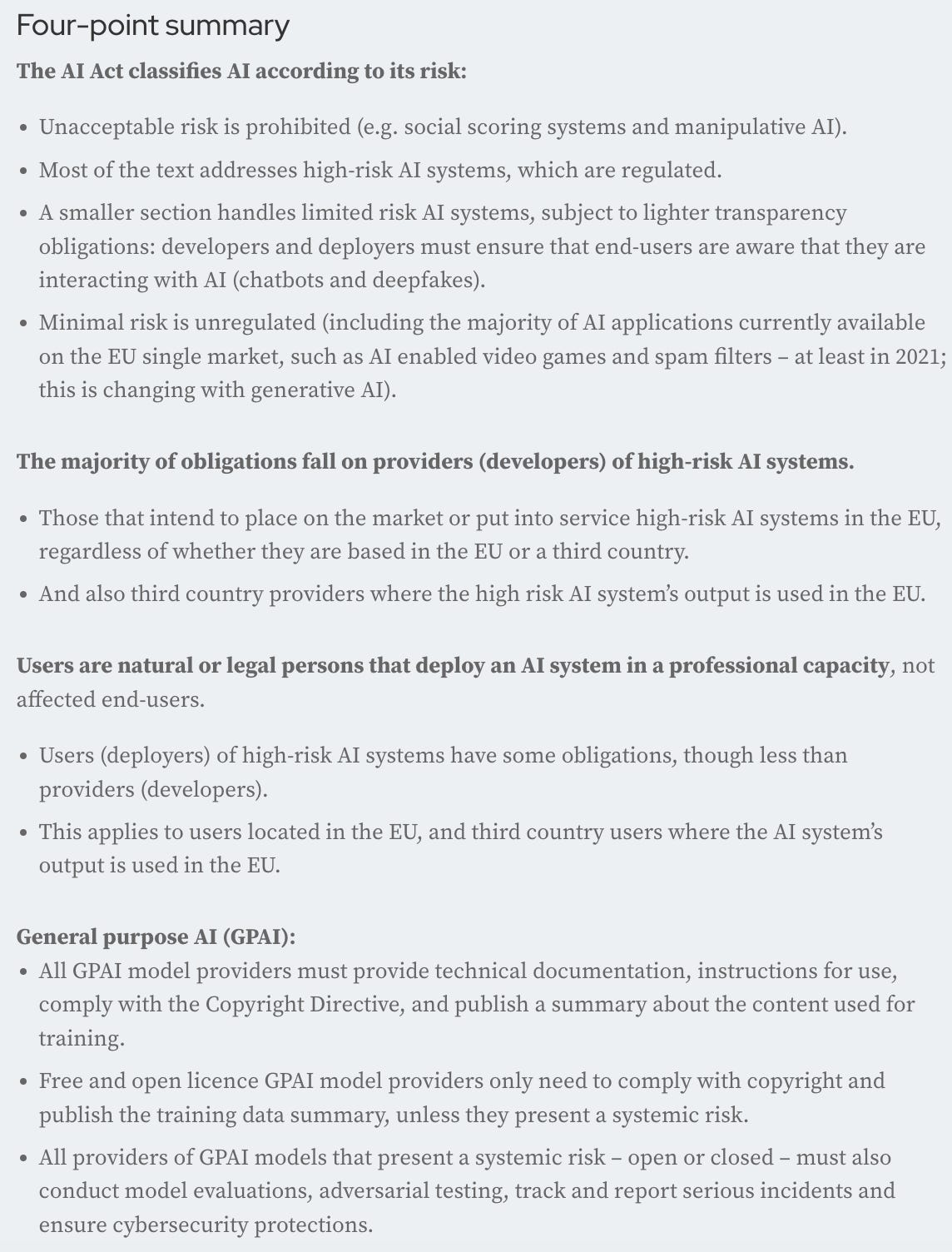

Just look at the “four point” summary of the Act provided by the European Future of Life Institute. That summary itself runs to eleven sub-bullets of legal and bureaucratic word salad, not through any fault of the Institute, but because the words in the Act all require additional interpretation. Great for lawyers and consultants, not so great for innovation.

Regulation Without Guidance

One of the key concerns companies face is the lack of detailed guidance on how to comply. The European Commission aims to publish clarifying guidelines on prohibited uses, but key threshold concepts remain undefined. For example, the Act’s ban on AI that exploits vulnerabilities, uses subliminal techniques, or engages in social scoring may sound reasonable, but without clear definitions, companies are left guessing what compliance actually entails.

In fact, the screenshot above showing the “four point summary” is only the beginning: a high-level summary of the Act weighs in at 2,000+ words. The Act itself is 90,000 words, with an additional 50,000 words in an interpretive memo. No small company is reading that; they’re paying lawyers and consultants to develop systems of compliance.

The lack of clarity in the AI Act, along with its massive scope, mirrors previous technology regulations that introduced broad restrictions without providing workable frameworks. The GDPR, for instance, created immense compliance headaches while failing to meaningfully curb data privacy violations from major tech firms. The AI Act appears poised to repeat this cycle, imposing heavy obligations while leaving businesses in regulatory limbo.

A Broad Net Catching Everyone

The Act does not just target AI developers; it applies to any company using AI within the EU. Many U.S. companies will be affected, even if they are not primarily AI companies. This extraterritorial reach, akin to the GDPR’s global impact, forces businesses worldwide to grapple with compliance, regardless of whether they have operations in Europe. Companies must now assess whether their AI applications, such as chatbots or internal HR tools, fall under vaguely defined prohibitions on biometric categorization or emotion recognition. (Not sure what “emotion recognition” means? Don’t worry, here are 2,000 words from a law firm helping you figure it out. The firm notes that they, too, aren’t sure what it means, but reach out and they will help you work through it 💰 💶 💸.)

The Act’s literacy requirement adds another layer of compliance, applying to all AI providers and deployers without clear implementation guidance. Businesses must ensure a “sufficient level of AI literacy” among employees, yet the law provides little clarity on what this entails. (But don’t worry, law firms are on it, although they, too, note that they aren’t sure what it means.) Once again, companies are expected to comply without clear direction, adding to the growing uncertainty surrounding AI governance.

With its broad scope, the AI Act has raised concerns about regulatory overreach, as critics warn that Europe’s AI rules could influence American policymaking and stifle innovation. While some argue that harmonizing global AI standards fosters trust and accountability, others fear that compliance costs will disproportionately burden smaller U.S. firms, potentially forcing them out of the European market or slowing AI advancements. Companies must now navigate this complex regulatory landscape, balancing EU mandates with the need to innovate.

All of this seems to suggest that European bureaucrats are committed to regulating the continent into irrelevance, and dragging non-European companies down with them.

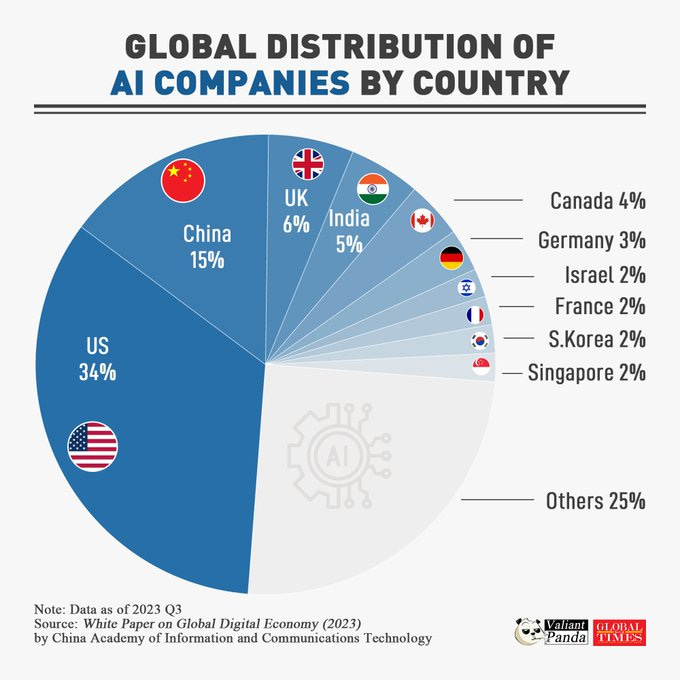

It’s almost axiomatic that the Act will harm non-European companies the most, mainly because Europe doesn’t have many AI companies to begin with:

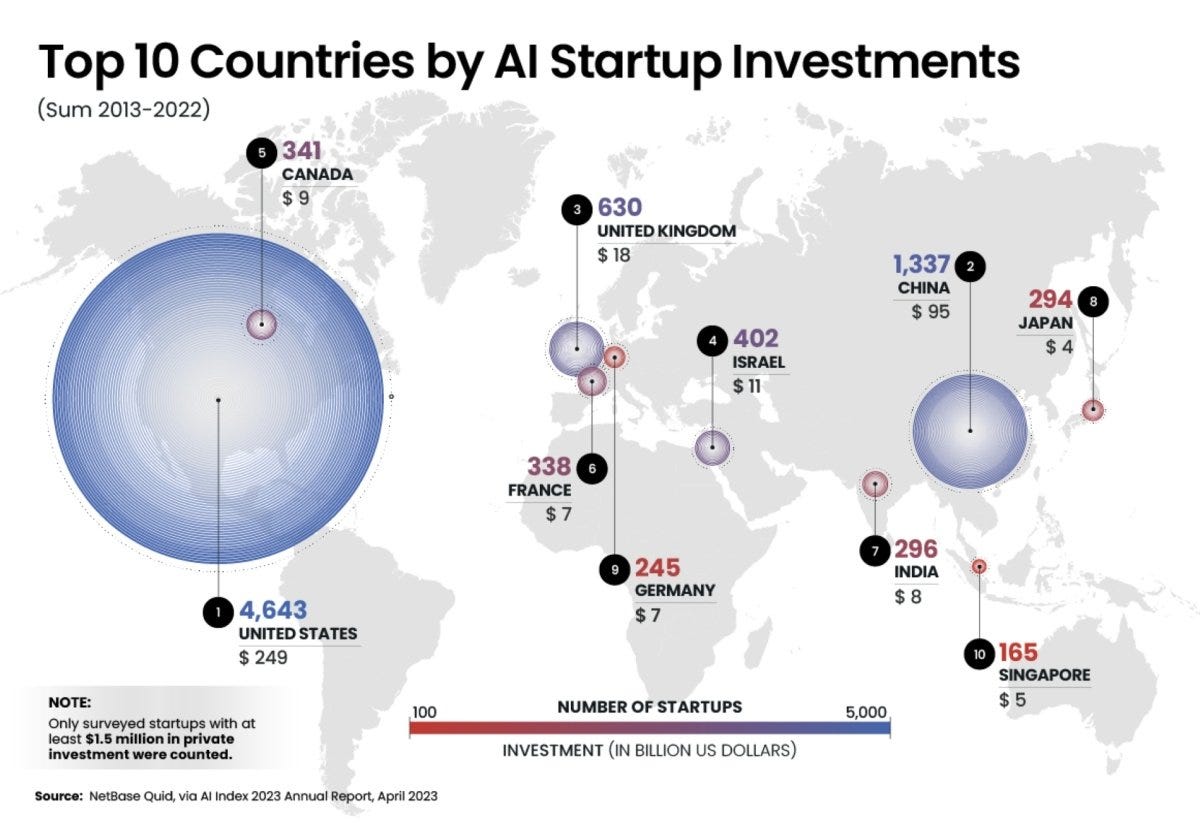

And doesn’t invest much in AI either:

With stats like that, European regulations are, by the numbers, destined to impact non-European companies (by accident or by design).

On this point, AI innovator Ole Lehmann comically notes:

Experts Sound the Alarm

Several experts and commentators share my skepticism about the EU AI Act’s effectiveness and its potential to stifle innovation. AI governance specialist Evi Fuelle warns that companies may be underestimating the detailed documentation required to demonstrate compliance. Meanwhile, Dessislava Savova of Clifford Chance, quoted by Bloomberg, notes that most companies now face an “AI stress test” with little preparation.

Industry voices have long cautioned against overregulation in AI, as excessive regulation risks cementing the dominance of large firms that can afford compliance costs while throttling startups and innovation. The EU AI Act exemplifies this concern: it will disproportionately burden smaller companies that lack the resources to navigate its complexities. One need only look at how Microsoft has quickly leaned into complying with the Act (as they did with GDPR) and how the largest companies are defining the standards for the industry.

A Familiar Pattern of Regulatory Overreach

The EU’s approach to AI regulation follows a predictable pattern of tech policy overreach. Instead of fostering responsible AI development through clear and pragmatic guidelines, lawmakers have prioritized broad prohibitions and punitive measures. Fines of up to 7% of global annual revenue for the most serious violations mirror the aggressive penalties under GDPR but ignore the reality that compliance costs alone may be debilitating for many companies.

While the EU frames these measures as protecting consumers and ensuring ethical AI use, history suggests that such regulations often fail to achieve their intended goals. The GDPR did not prevent major data privacy scandals, and there is little reason to believe the AI Act will meaningfully curb problematic AI applications. Instead, it is likely to generate a compliance industry of legal consultants and auditors while leaving businesses struggling to interpret vague mandates.

One commenter hits the nail on the head, describing how smaller European companies are struggling with compliance while larger companies take it in stride.

Final Thoughts

The EU AI Act is a case study in how well-intentioned (I’m not sure they’re well-intentioned, but I’ll give them the benefit of the doubt) regulations can become a bureaucratic nightmare. Without clear guidelines, businesses are forced to operate in uncertainty, risking fines and legal challenges. Worse, the law’s broad scope ensures that even companies with minimal AI engagement will face burdensome compliance requirements.

Rather than imposing sweeping, ambiguous mandates, regulators should work with industry leaders to develop targeted, practical solutions. AI governance should not be about regulatory theater; it should be about fostering innovation while mitigating real risks. The EU AI Act, as it stands, is unlikely to achieve that balance, and companies are about to feel the cost of compliance.